人工智能专栏文章汇总:人工智能学习专栏文章汇总-CSDN博客

本篇目录

一、词向量处理

1.01 词袋模型(Bag-of-words model)

1.02 simtext

1.03 百度飞桨(paddlenlp.embeddings)

1.04 百度千帆SDK(qianfan.Embedding)

1.2 SentenceTransformers(资源国内可访问)

1.2.1 句向量生成(SentenceTransformer)

1.2.1 文本相似度比较(util.cos_sim)

1.2.3 文本匹配搜索(util.semantic_search)

1.2.4 相近语义挖掘(util.paraphrase_mining)

1.2.5 图文搜索

1.3 text2vec

1.3.1 句向量生成

Word2Vec

SentenceModel

1.3.2 文本相似度比较(Similarity)

1.3.3 文本匹配搜索(semantic_search)

1.5 HuggingFace Transformers

二、基于BERT预训练模型+微调完成NLP主流任务

2.1 任务说明

2.2 数据准备

2.1.1 数据加载

2.1.2 转换数据格式

2.1.3 构建DataLoader

2.2 模型构建

2.3 训练配置

2.4 模型训练与评估

2.5 模型测试

本文主要介绍NLP中常用的词向量处理方法和基于NLP预训练模型的微调方法。

自然语言处理( Natural Language Processing, NLP)是计算机科学领域与人工智能领域中的一个重要方向。NLP下游任务主要包括:机器翻译、舆情监测、自动摘要、观点提取、文本分类、问题回答、文本语义对比、语音识别、中文OCR等方面。

自然语言是天然具有上下文序列关系的表达方式。针对自然语言处理(NLP),科学家们一步一步发展的模型包括RNN,LSTM,Transformers,BERT, GPT,等等。

其中,BERT 模型的预训练任务主要为模拟人类的完形填空任务,在这种预训练方法下,模型需要同时关注上下文间的信息,从而得出当前位置的 token。另一种较强的 NLP模型GPT,则使用了自回归的方法来训练,也就是说,模型仅可通过当前位置之前的字段来推理当前位置的 token。

如上一篇所述,要实现NLP任务,首先我们需要对文本进行向量化处理。

一、词向量处理

NLP的文本向量处理主要是指将原始文本转换成词向量和句向量,方便做词语和句子之间的语义匹配,搜索等NLP任务。我尝试过整理出来的文本向量处理工具如下:

1.01 词袋模型(Bag-of-words model)

词袋模型(Bag-of-words model)是用于自然语言处理和信息检索中的一种简单的文档处理方法。通过这一模型,一篇文档可以通过统计所有单词的数目来表示,这种方法不考虑语法和单词出现的先后顺序。这一模型在文档分类里广为应用,通过统计每个单词的出现次数(频率)作为分类器的特征。

如下两篇简单的文本文档:

Jane wants to go to Shenzhen.

Bob wants to go to Shanghai.

基于这两篇文档我们可以构建一个字典:

{‘Jane’:1, ‘wants’:2, ‘to’:4, ‘go’:2, ‘Shenzhen’:1, ‘Bob’:1, ‘Shanghai’:1}

我们可将两篇文档表示为如下的向量:

例句1:[1,1,2,1,1,0,0]

例句2:[0,1,2,1,0,1,1]

词袋模型实际就是把文档表示成向量,其中向量的维数就是字典所含词的个数,在上例中,向量中的第i个元素就是统计该文档中对应字典中的第i个单词出现的个数,因此可认为词袋模型就是统计词频直方图的简单文档表示方法。

词袋模型的思路还可以用于处理图像分类,可以参考:词袋模型(Bag-of-words model)-CSDN博客

1.02 simtext

simtext可以计算两文档间四大文本相似性指标,分别为:

- Sim_Cosine cosine相似性(余弦相似度,常用)

- Sim_Jaccard Jaccard相似性

- Sim_MinEdit 最小编辑距离

- Sim_Simple 微软Word中的track changes

它的好处是不需要下载预训练模型,直接用pip安装即可使用:

pip install simtext

中文文本相似性代码:

from simtext import similarity text1 = '在宏观经济背景下,为继续优化贷款结构,重点发展可以抵抗经济周期不良的贷款' text2 = '在宏观经济背景下,为继续优化贷款结构,重点发展可三年专业化、集约化、综合金融+物联网金融四大金融特色的基础上' sim = similarity() res = sim.compute(text1, text2) print(res)

打印结果:

{'Sim_Cosine': 0.46475800154489, 'Sim_Jaccard': 0.3333333333333333, 'Sim_MinEdit': 29, 'Sim_Simple': 0.9889595182335229}英文文本相似性代码:

from simtext import similarity A = 'We expect demand to increase.' B = 'We expect worldwide demand to increase.' C = 'We expect weakness in sales' sim = similarity() AB = sim.compute(A, B) AC = sim.compute(A, C) print(AB) print(AC)

打印结果:

{'Sim_Cosine': 0.9128709291752769, 'Sim_Jaccard': 0.8333333333333334, 'Sim_MinEdit': 2, 'Sim_Simple': 0.9545454545454546} {'Sim_Cosine': 0.39999999999999997, 'Sim_Jaccard': 0.25, 'Sim_MinEdit': 4, 'Sim_Simple': 0.9315789473684211}1.03 百度飞桨(paddlenlp.embeddings)

首先使用 pip install -U paddlenlp 安装 paddlenlp 包。

词向量

使用百度飞桨的paddlenlp embeddings的预训练模型,可以直接获得一个单词的词向量,并可对词向量进行相似度比较。代码如下:

from paddlenlp.embeddings import TokenEmbedding # 初始化TokenEmbedding, 预训练embedding未下载时会自动下载并加载数据 token_embedding = TokenEmbedding(embedding_name="w2v.baidu_encyclopedia.target.word-word.dim300") # 查看token_embedding详情 #print(token_embedding) #获得词向量 test_token_embedding = token_embedding.search("中国") #print(test_token_embedding) #比较词向量 score1 = token_embedding.cosine_sim("女孩", "女皇") score2 = token_embedding.cosine_sim("女孩", "小女孩") score3 = token_embedding.cosine_sim("女孩", "中国") print('score1:', score1) print('score2:', score2) print('score3:', score3) ---------------------------------------------------------------------------- score1: 0.32632214 score2: 0.7869123 score3: 0.15649165句向量

句向量有一种比较简单粗暴的方式,就是将句子里的所有词向量相加,但是这种方式获得的向量不能很好的表述句子的意思,准确度不高。

# 初始化TokenEmbedding, 预训练embedding没下载时会自动下载并加载数据 token_embedding = TokenEmbedding(embedding_name="w2v.baidu_encyclopedia.target.word-word.dim300") # 查看token_embedding详情 #print(token_embedding) tokenizer = JiebaTokenizer(vocab=token_embedding.vocab) def get_sentence_embedding(text): # 分词 words = tokenizer.cut(text) print(words) # 获取词向量 word_embeddings = token_embedding.search(words) #print(word_embeddings) # 通过词向量相加,计算句向量 sentence_embedding = np.sum(word_embeddings, axis=0) / len(words) #print(sentence_embedding) return sentence_embedding text1 = "飞桨是优秀的深度学习平台" text2 = "我喜欢喝咖啡" sen_emb1 = get_sentence_embedding(text1) print("句向量1:\n", sen_emb1.shape) sen_emb2 = get_sentence_embedding(text2) print("句向量2:\n", sen_emb2.shape) sim = F.cosine_similarity(paddle.to_tensor(sen_emb1).unsqueeze(0), paddle.to_tensor(sen_emb2).unsqueeze(0)) print("Similarity: {:.5f}".format(sim.item()))1.04 百度千帆SDK(qianfan.Embedding)

百度千帆大模型SDK也提供了词向量的API。首先安装千帆SDK:

pip install qianfan -U

调用方法如下:

# Embedding 基础功能 import qianfan # 替换下列示例中参数,应用API Key替换your_ak,Secret Key替换your_sk emb = qianfan.Embedding(ak="your_ak", sk="your_sk") resp = emb.do(texts=[ # 省略 model 时则调用默认模型 Embedding-V1 "世界上最高的山" ])1.2 SentenceTransformers(资源国内可访问)

SentenceTransformers是Python里用于对文本图像进行向量操作的库。

(官网:SentenceTransformers Documentation — Sentence-Transformers documentation)

首先使用 pip install -U sentence_transformers 安装 sentence_transformers 包。

这个库提供的生成词向量的方法是使用BERT算法,对句意的表达比较准确。可以用于文本的向量生成,相似度比较,匹配等任务。

这个包的模型资源目前在国内是可以访问的,可以直接下载到本地:

https://public.ukp.informatik.tu-darmstadt.de/reimers/sentence-transformers/v0.2/

然后查找paraphrase-multilingual-MiniLM-L12-v2这个模型名字,点击下载即可。

1.2.1 句向量生成(SentenceTransformer)

可以用sentence_transformers包里的SentenceTransformer来生成句向量。

示例代码:

import sys from sentence_transformers.util import cos_sim from sentence_transformers import SentenceTransformer as SBert #model = SBert('paraphrase-multilingual-MiniLM-L12-v2') model = SBert("C:\\Users\\aric\\.models\\paraphrase-multilingual-MiniLM-L12-v2") # Two lists of sentences sentences1 = ['如何更换花呗绑定银行卡', 'The cat sits outside', 'A man is playing guitar', 'The new movie is awesome'] sentences2 = ['花呗更改绑定银行卡', 'The dog plays in the garden', 'A woman watches TV', 'The new movie is so great'] # Compute embedding for both lists embeddings1 = model.encode(sentences1) embeddings2 = model.encode(sentences2) print(type(embeddings1), embeddings1.shape) # The result is a list of sentence embeddings as numpy arrays for sentence, embedding in zip(sentences1, embeddings1): print("Sentence:", sentence) print("Embedding shape:", embedding.shape) print("Embedding head:", embedding[:10]) print() ----------------------------------------------------------------------------------- (4, 384) Sentence: 如何更换花呗绑定银行卡 Embedding shape: (384,) Embedding head: [-0.08839616 0.29445878 -0.25130653 -0.00759273 -0.0749087 -0.12786895 0.07136863 -0.01503289 -0.19017595 -0.12699445] Sentence: The cat sits outside Embedding shape: (384,) Embedding head: [ 0.45684573 -0.14459176 -0.0388849 0.2711025 0.0222025 0.2317232 0.14208616 0.13658428 -0.27846363 0.05661529] Sentence: A man is playing guitar Embedding shape: (384,) Embedding head: [-0.20837498 0.00522519 -0.23411965 -0.07861497 -0.35490423 -0.27809393 0.24954818 0.15160584 0.01028005 0.1939052 ] Sentence: The new movie is awesome Embedding shape: (384,) Embedding head: [-0.5378314 -0.36144564 -0.5304235 -0.20994733 -0.03825595 0.22604015 0.35931802 0.14547679 0.05396605 -0.08255189]1.2.1 文本相似度比较(util.cos_sim)

示例代码:

import sys from sentence_transformers.util import cos_sim from sentence_transformers import SentenceTransformer as SBert #model = SBert('paraphrase-multilingual-MiniLM-L12-v2') model = SBert("C:\\Users\\aric\\.models\\paraphrase-multilingual-MiniLM-L12-v2") # Two lists of sentences sentences1 = ['如何更换花呗绑定银行卡', 'The cat sits outside', 'A man is playing guitar', 'The new movie is awesome'] sentences2 = ['花呗更改绑定银行卡', 'The dog plays in the garden', 'A woman watches TV', 'The new movie is so great'] # Compute embedding for both lists embeddings1 = model.encode(sentences1) embeddings2 = model.encode(sentences2) print(type(embeddings1), embeddings1.shape) # The result is a list of sentence embeddings as numpy arrays """ for sentence, embedding in zip(sentences1, embeddings1): print("Sentence:", sentence) print("Embedding shape:", embedding.shape) print("Embedding head:", embedding[:10]) print() """ # Compute cosine-similarits cosine_scores_0 = cos_sim(embeddings1[0], embeddings2[0]) cosine_scores = cos_sim(embeddings1, embeddings2) print(cosine_scores_0) print(cosine_scores) --------------------------------------------------------------------------------------- (4, 384) tensor([[0.9477]]) tensor([[ 0.9477, -0.1748, -0.0839, -0.0044], [-0.0097, 0.1908, -0.0203, 0.0302], [-0.0010, 0.1062, 0.0055, 0.0097], [ 0.0302, -0.0160, 0.1321, 0.9591]]) Note:最后这个4x4的向量的对角线上的数值,代表每一对句向量的相似度结果)1.2.3 文本匹配搜索(util.semantic_search)

文本匹配搜索通过理解搜索查询的内容来提高搜索的准确性,而不是仅仅依赖于词汇匹配。这是利用句向量之间的相似性完成的。文本匹配搜索是将语料库中的所有条目(句子)嵌入到向量空间中。在搜索时,查询语句也会被嵌入到相同的向量空间中,并从语料库中找到最接近的向量。

示例代码:

from sentence_transformers import SentenceTransformer, util # Download model model = SentenceTransformer("C:\\Users\\aric\\.models\\paraphrase-multilingual-MiniLM-L12-v2") # Corpus of documents and their embeddings corpus = ['Python is an interpreted high-level general-purpose programming language.', 'Python is dynamically-typed and garbage-collected.', 'The quick brown fox jumps over the lazy dog.'] corpus_embeddings = model.encode(corpus) # Queries and their embeddings queries = ["What is Python?", "What did the fox do?"] queries_embeddings = model.encode(queries) # Find the top-2 corpus documents matching each query hits = util.semantic_search(queries_embeddings, corpus_embeddings, top_k=2) # Print results of first query print(f"Query: {queries[0]}") for hit in hits[0]: print(corpus[hit['corpus_id']], "(Score: {:.4f})".format(hit['score'])) # Query: What is Python? # Python is an interpreted high-level general-purpose programming language. (Score: 0.6759) # Python is dynamically-typed and garbage-collected. (Score: 0.6219) # Print results of second query print(f"Query: {queries[1]}") for hit in hits[1]: print(corpus[hit['corpus_id']], "(Score: {:.4f})".format(hit['score'])) --------------------------------------------------------------------------------------- 打印结果: Query: What is Python? Python is an interpreted high-level general-purpose programming language. (Score: 0.7616) Python is dynamically-typed and garbage-collected. (Score: 0.6267) Query: What did the fox do? The quick brown fox jumps over the lazy dog. (Score: 0.4893) Python is dynamically-typed and garbage-collected. (Score: 0.0746)1.2.4 相近语义挖掘(util.paraphrase_mining)

Paraphrase Mining是在大量句子中寻找相近释义的句子,即具有非常相似含义的文本。

这可以使用 util 模块的 paraphrase_mining 函数来实现。

from sentence_transformers import SentenceTransformer, util # Download model model = SentenceTransformer('all-MiniLM-L6-v2') # List of sentences sentences = ['The cat sits outside', 'A man is playing guitar', 'I love pasta', 'The new movie is awesome', 'The cat plays in the garden', 'A woman watches TV', 'The new movie is so great', 'Do you like pizza?', '我喜欢喝咖啡', '我爱喝咖啡', '我喜欢喝牛奶',] # Look for paraphrases paraphrases = util.paraphrase_mining(model, sentences) # Print paraphrases print("Top 5 paraphrases") for paraphrase in paraphrases[0:5]: score, i, j = paraphrase print("Score {:.4f} ---- {} ---- {}".format(score, sentences[i], sentences[j])) --------------------------------------------------------------------------------------- Top 5 paraphrases Score 0.9751 ---- 我喜欢喝咖啡 ---- 我爱喝咖啡 Score 0.9591 ---- The new movie is awesome ---- The new movie is so great Score 0.6774 ---- The cat sits outside ---- The cat plays in the garden Score 0.6384 ---- 我喜欢喝咖啡 ---- 我喜欢喝牛奶 Score 0.6007 ---- 我爱喝咖啡 ---- 我喜欢喝牛奶1.2.5 图文搜索

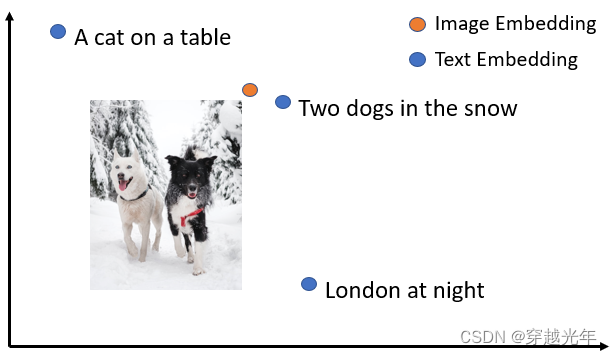

SentenceTransformers 提供允许将图像和文本嵌入到同一向量空间,通过这中模型可以找到相似的图像以及实现图像搜索,即使用文本搜索图像,反之亦然。

同一向量空间中的文本和图像示例:

要执行图像搜索,需要加载像 CLIP 这样的模型,并使用其encode 方法对图像和文本进行编码:

from sentence_transformers import SentenceTransformer, util from PIL import Image # Load CLIP model model = SentenceTransformer('clip-ViT-B-32') # Encode an image img_emb = model.encode(Image.open('two_dogs_in_snow.jpg')) # Encode text descriptions text_emb = model.encode(['Two dogs in the snow', 'A cat on a table', 'A picture of London at night']) # Compute cosine similarities cos_scores = util.cos_sim(img_emb, text_emb) print(cos_scores)更多可参考:[NLP] SentenceTransformers使用介绍_sentence transformer训练-CSDN博客

1.3 text2vec

这个好像是国内的开发者做的(据说里面是封装了sentence-transormers的内容)。同样也可以进行文本向量的生成,相似度比较,匹配等任务。

它的模型基本都发布在HuggingFace上,现在国内也无法正常访问。

1.3.1 句向量生成

Word2Vec

第一种方式,是使用text2vec包里的Word2Vec:

这种方式使用腾讯词向量Tencent_AILab_ChineseEmbedding(这个目前是可以下载的)计算各字词的词向量,句子向量通过单词词向量取平均值得到(这种方式无法保证句意的正确理解)

首先使用 pip install -U text2vec 安装 text2vec 包。

from text2vec import Word2Vec def compute_emb(model): # Embed a list of sentences sentences = [ '卡', '银行卡', '如何更换花呗绑定银行卡', '花呗更改绑定银行卡', 'This framework generates embeddings for each input sentence', 'Sentences are passed as a list of string.', 'The quick brown fox jumps over the lazy dog.' ] sentence_embeddings = model.encode(sentences) print(type(sentence_embeddings), sentence_embeddings.shape) # The result is a list of sentence embeddings as numpy arrays for sentence, embedding in zip(sentences, sentence_embeddings): print("Sentence:", sentence) print("Embedding shape:", embedding.shape) print("Embedding head:", embedding[:10]) print() # 中文词向量模型(word2vec),中文字面匹配任务和冷启动适用 w2v_model = Word2Vec("w2v-light-tencent-chinese") compute_emb(w2v_model) ------------------------------------------------------------------------------------ 打印结果: (7, 200) Sentence: 卡 Embedding shape: (200,) Embedding head: [ 0.06761453 -0.10960816 -0.04829824 0.0156597 -0.09412017 -0.04805465 -0.03369278 -0.07476041 -0.01600934 0.03106228] Sentence: 银行卡 Embedding shape: (200,) Embedding head: [ 0.01032454 -0.13564903 -0.00089282 0.02286329 -0.03501284 0.00987683 0.02884413 -0.03491557 0.02036332 0.04516884] Sentence: 如何更换花呗绑定银行卡 Embedding shape: (200,) Embedding head: [ 0.02396784 -0.13885356 0.00176219 0.02540027 0.00949343 -0.01486312 0.01011733 0.00190828 0.02708069 0.04316072] Sentence: 花呗更改绑定银行卡 Embedding shape: (200,) Embedding head: [ 0.00871027 -0.14244929 -0.00959482 0.03021128 0.01514321 -0.01624702 0.00260827 0.0131352 0.02293272 0.04481505] Sentence: This framework generates embeddings for each input sentence Embedding shape: (200,) Embedding head: [-0.08317478 -0.00601972 -0.06293213 -0.03963032 -0.0145333 -0.0549945 0.05606257 0.02389491 -0.02102496 0.03023159] Sentence: Sentences are passed as a list of string. Embedding shape: (200,) Embedding head: [-0.08008799 -0.01654172 -0.04550576 -0.03715633 0.00133283 -0.04776235 0.04780829 0.01377041 -0.01251951 0.02603387] Sentence: The quick brown fox jumps over the lazy dog. Embedding shape: (200,) Embedding head: [-0.08605123 -0.01434057 -0.06376401 -0.03962022 -0.00724643 -0.05585583 0.05175515 0.02725058 -0.01821304 0.02920807]w2v-light-tencent-chinese是通过gensim加载的Word2Vec模型,模型自动下载到本机路径:~/.text2vec/datasets/light_Tencent_AILab_ChineseEmbedding.bin

SentenceModel

第二种方式,是使用text2vec包里的SentenceModel方法(和SentenceTransformers类似):

import sys sys.path.append('..') from text2vec import SentenceModel def compute_emb(model): # Embed a list of sentences sentences = [ '卡', '银行卡', '如何更换花呗绑定银行卡', '花呗更改绑定银行卡', 'This framework generates embeddings for each input sentence', 'Sentences are passed as a list of string.', 'The quick brown fox jumps over the lazy dog.' ] sentence_embeddings = model.encode(sentences) print(type(sentence_embeddings), sentence_embeddings.shape) # The result is a list of sentence embeddings as numpy arrays for sentence, embedding in zip(sentences, sentence_embeddings): print("Sentence:", sentence) print("Embedding shape:", embedding.shape) print("Embedding head:", embedding[:10]) print() if __name__ == "__main__": # 中文句向量模型(CoSENT),中文语义匹配任务推荐,支持fine-tune继续训练 t2v_model = SentenceModel("shibing624/text2vec-base-chinese") compute_emb(t2v_model) # 支持多语言的句向量模型(CoSENT),多语言(包括中英文)语义匹配任务推荐,支持fine-tune继续训练 sbert_model = SentenceModel("shibing624/text2vec-base-multilingual") compute_emb(sbert_model)1.3.2 文本相似度比较(Similarity)

使用text2vec.Similarity可以直接比较文本的相似度,它默认会调用“shibing624/text2vec-base-chinese”模型产生文本句向量。但是也有同样的问题,模型资源是在HuggingFace上的,国内还是有无法访问的问题。

import sys sys.path.append('..') from text2vec import Similarity # Two lists of sentences sentences1 = ['如何更换花呗绑定银行卡', 'The cat sits outside', 'A man is playing guitar', 'The new movie is awesome'] sentences2 = ['花呗更改绑定银行卡', 'The dog plays in the garden', 'A woman watches TV', 'The new movie is so great'] sim_model = Similarity() for i in range(len(sentences1)): for j in range(len(sentences2)): score = sim_model.get_score(sentences1[i], sentences2[j]) print("{} \t\t {} \t\t Score: {:.4f}".format(sentences1[i], sentences2[j], score)) ------------------------------------------------------------------------------------------- 如何更换花呗绑定银行卡 花呗更改绑定银行卡 Score: 0.9477 如何更换花呗绑定银行卡 The dog plays in the garden Score: -0.1748 如何更换花呗绑定银行卡 A woman watches TV Score: -0.0839 如何更换花呗绑定银行卡 The new movie is so great Score: -0.0044 The cat sits outside 花呗更改绑定银行卡 Score: -0.0097 The cat sits outside The dog plays in the garden Score: 0.1908 The cat sits outside A woman watches TV Score: -0.0203 The cat sits outside The new movie is so great Score: 0.0302 A man is playing guitar 花呗更改绑定银行卡 Score: -0.0010 A man is playing guitar The dog plays in the garden Score: 0.1062 A man is playing guitar A woman watches TV Score: 0.0055 A man is playing guitar The new movie is so great Score: 0.0097 The new movie is awesome 花呗更改绑定银行卡 Score: 0.0302 The new movie is awesome The dog plays in the garden Score: -0.0160 The new movie is awesome A woman watches TV Score: 0.1321 The new movie is awesome The new movie is so great Score: 0.95911.3.3 文本匹配搜索(semantic_search)

一般是在文档候选集中找与query最相似的文本,常用于QA场景的问句相似匹配、文本相似检索等任务。可以使用text2vec包里的semantic_search。它默认使用的也是“shibing624/text2vec-base-chinese”模型。

import sys sys.path.append('..') from text2vec import SentenceModel, cos_sim, semantic_search embedder = SentenceModel() # Corpus with example sentences corpus = [ '花呗更改绑定银行卡', '我什么时候开通了花呗', 'A man is eating food.', 'A man is eating a piece of bread.', 'The girl is carrying a baby.', 'A man is riding a horse.', 'A woman is playing violin.', 'Two men pushed carts through the woods.', 'A man is riding a white horse on an enclosed ground.', 'A monkey is playing drums.', 'A cheetah is running behind its prey.' ] corpus_embeddings = embedder.encode(corpus) # Query sentences: queries = [ '如何更换花呗绑定银行卡', 'A man is eating pasta.', 'Someone in a gorilla costume is playing a set of drums.', 'A cheetah chases prey on across a field.'] for query in queries: query_embedding = embedder.encode(query) hits = semantic_search(query_embedding, corpus_embeddings, top_k=5) print("\n\n======================\n\n") print("Query:", query) print("\nTop 5 most similar sentences in corpus:") hits = hits[0] # Get the hits for the first query for hit in hits: print(corpus[hit['corpus_id']], "(Score: {:.4f})".format(hit['score'])) ------------------------------------------------------------------------------------- Query: 如何更换花呗绑定银行卡 Top 5 most similar sentences in corpus: 花呗更改绑定银行卡 (Score: 0.9477) 我什么时候开通了花呗 (Score: 0.3635) A man is eating food. (Score: 0.0321) A man is riding a horse. (Score: 0.0228) Two men pushed carts through the woods. (Score: 0.0090) ====================== Query: A man is eating pasta. Top 5 most similar sentences in corpus: A man is eating food. (Score: 0.6734) A man is eating a piece of bread. (Score: 0.4269) A man is riding a horse. (Score: 0.2086) A man is riding a white horse on an enclosed ground. (Score: 0.1020) A cheetah is running behind its prey. (Score: 0.0566) ====================== Query: Someone in a gorilla costume is playing a set of drums. Top 5 most similar sentences in corpus: A monkey is playing drums. (Score: 0.8167) A cheetah is running behind its prey. (Score: 0.2720) A woman is playing violin. (Score: 0.1721) A man is riding a horse. (Score: 0.1291) A man is riding a white horse on an enclosed ground. (Score: 0.1213) ====================== Query: A cheetah chases prey on across a field. Top 5 most similar sentences in corpus: A cheetah is running behind its prey. (Score: 0.9147) A monkey is playing drums. (Score: 0.2655) A man is riding a horse. (Score: 0.1933) A man is riding a white horse on an enclosed ground. (Score: 0.1733) A man is eating food. (Score: 0.0329)1.5 HuggingFace Transformers

可以直接用AutoModel, AutoTokenizer这种方式来使用在HuggingFace Hub发布的模型。它会自动去HuggingFace匹配和下载对应的模型(可惜,目前国内无法正常访问)。

import os import torch from transformers import AutoTokenizer, AutoModel os.environ["KMP_DUPLICATE_LIB_OK"] = "TRUE" # Mean Pooling - Take attention mask into account for correct averaging def mean_pooling(model_output, attention_mask): token_embeddings = model_output[0] # First element of model_output contains all token embeddings input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float() return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9) # Load model from HuggingFace Hub tokenizer = AutoTokenizer.from_pretrained('shibing624/text2vec-base-chinese') model = AutoModel.from_pretrained('shibing624/text2vec-base-chinese') sentences = ['如何更换花呗绑定银行卡', '花呗更改绑定银行卡'] # Tokenize sentences encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt') # Compute token embeddings with torch.no_grad(): model_output = model(**encoded_input) # Perform pooling. In this case, max pooling. sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask']) print("Sentence embeddings:") print(sentence_embeddings)二、基于BERT预训练模型+微调完成NLP主流任务

预训练模型基于新的自然语言处理任务范式:预训练+微调,极大推动了自然语言处理领域的发展。

基于这个新的训练范式,预训练模型可以被广泛应用于NLP领域的各项任务中。一般来讲,比较常见的经典NLP任务包括以下四类:

- 分类式任务:给定一串文本,判断该文本的类别标签

- 匹配式任务:对给定的两个文本判断其是否语义相似

- 问答式任务:给定问题和文档,要求从文档中抽取出问题的答案

- 序列标注式任务:给定一串文本,输出对应的标签序列

- 生成式任务:给定一串文本,同时要求模型输出一串文本

下面以文本匹配任务为例来说明预训练模型的使用和微调过程。

2.1 任务说明

文本匹配是自然语言处理领域基础的核心任务之一,其主要用于判断给定的两句文本是否语义相似。文本匹配技术具有广泛的应用场景,比如信息检索、问答系统,文本蕴含等场景。

例如,文本匹配技术可以用于判定以下三句话之间的语义相似关系:

- 苹果在什么时候成熟?

- 苹果一般在几月份成熟?

- 苹果手机什么时候可以买?

文本匹配技术期望能够使得计算机自动判定第1和第2句话是语义相似的,第1和第3句话,第2和第3句话之间是不相似的。

本节将基于PaddleNLP库中的BERT模型建模文本匹配任务,带领大家体验预训练+微调的训练新范式。由于PaddleNLP库中的BERT模型已经预训练过,因此本节将基于预训练后的BERT模型,在LCQMC数据集上微调BERT,建模文本匹配任务。

2.2 数据准备

LCQMC是百度知道领域的中文问题匹配数据集,该数据集是从不同领域的用户中提取出来。LCQMC的训练集的数量是 238766条,验证集的大小是4401条,测试集的大小是4401条。 下面展示了一条LCQMC数据集的样例,数据分为三列,前两列是判定语义相似的文本对,后一列是标签,其中1表示相似,0表示不相似。

什么花一年四季都开 什么花一年四季都是开的 1

大家觉得她好看吗 大家觉得跑男好看吗? 0

2.1.1 数据加载

由于LCQMC数据集已经集成到PaddleNLP中,因此本节我们将使用PaddleNLP内置的LCQMC数据集进行文本匹配任务。可以使用如下方式加载LCQMC数据集中的训练集、验证集和测试集,需要注意的是训练集和验证集是有标签的,测试集是没有标签的。

import os import paddle import paddle.nn as nn import paddle.nn.functional as F from paddle.utils.download import get_path_from_url from paddlenlp.datasets import load_dataset from paddlenlp.data import Pad, Stack, Tuple, Vocab # 加载 Lcqmc 的训练集、验证集 train_set, dev_set, test_set = load_dataset("lcqmc", splits=["train", "dev", "test"]) # 输出训练集的前 3 条样本 for idx, example in enumerate(train_set): if idx