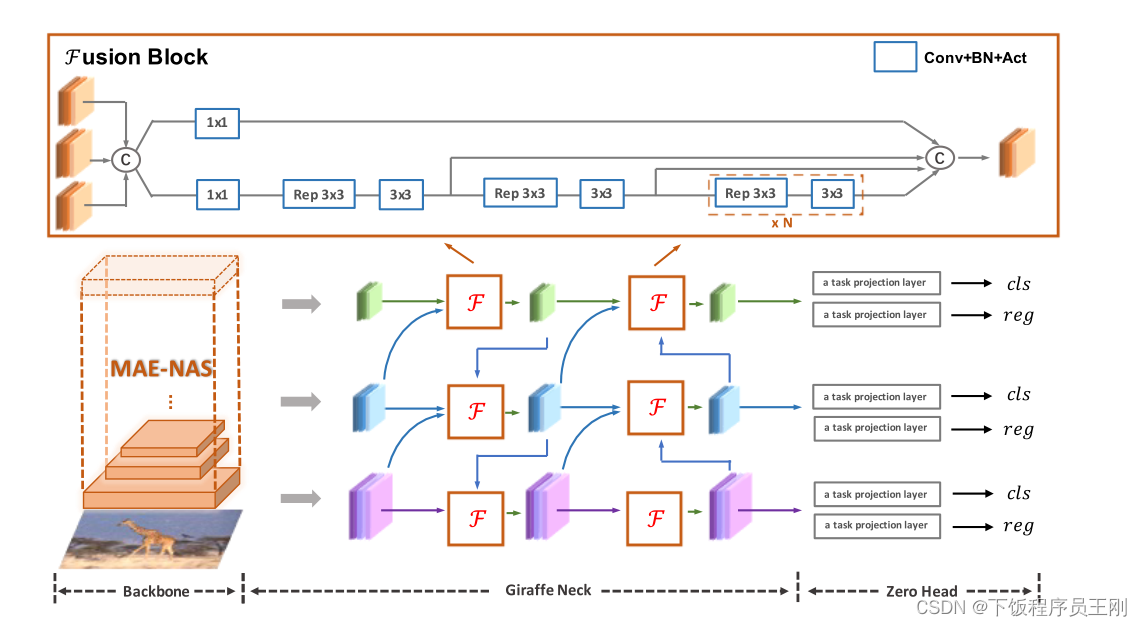

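This figure is slightly off: the GiraffeNeckV2 code contains only 5 Fusion Blocks, while the figure shows 6.

https://github.com/tinyvision/DAMO-YOLO/blob/master/damo/base_models/necks/giraffe_fpn_btn.py

The code instantiates only 5 CSPStage modules.

So I drew my own overview diagram and opened an issue on GitHub, and the original authors confirmed it:

I think the pictures in your paper are not rigorous in several places · Issue #91 · tinyvision/DAMO-YOLO · GitHub

To understand the neck, you only need to understand what the Fusion Block (CSPStage in the code) does; the rest is much the same as PAN.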

    import torch
    import torch.nn as nn

    # ConvBNAct, BasicBlock_3x3_Reverse and SPP are defined elsewhere in the
    # DAMO-YOLO repo.


    class CSPStage(nn.Module):
        def __init__(self,
                     block_fn,
                     ch_in,
                     ch_hidden_ratio,
                     ch_out,
                     n,
                     act='swish',
                     spp=False):
            super(CSPStage, self).__init__()

            # Split the output width into two branches.
            split_ratio = 2
            ch_first = int(ch_out // split_ratio)
            ch_mid = int(ch_out - ch_first)
            self.conv1 = ConvBNAct(ch_in, ch_first, 1, act=act)
            self.conv2 = ConvBNAct(ch_in, ch_mid, 1, act=act)
            self.convs = nn.Sequential()

            # Stack n blocks on the second branch; each keeps ch_mid channels.
            next_ch_in = ch_mid
            for i in range(n):
                if block_fn == 'BasicBlock_3x3_Reverse':
                    self.convs.add_module(
                        str(i),
                        BasicBlock_3x3_Reverse(next_ch_in,
                                               ch_hidden_ratio,
                                               ch_mid,
                                               act=act,
                                               shortcut=True))
                else:
                    raise NotImplementedError
                if i == (n - 1) // 2 and spp:
                    self.convs.add_module(
                        'spp', SPP(ch_mid * 4, ch_mid, 1, [5, 9, 13], act=act))
                next_ch_in = ch_mid
            # conv3 fuses y1 together with the output of every stacked block.
            self.conv3 = ConvBNAct(ch_mid * n + ch_first, ch_out, 1, act=act)

        def forward(self, x):
            y1 = self.conv1(x)
            y2 = self.conv2(x)

            # Collect y1 and every intermediate y2, then concatenate on channels.
            mid_out = [y1]
            for conv in self.convs:
                y2 = conv(y2)
                mid_out.append(y2)
            y = torch.cat(mid_out, axis=1)
            y = self.conv3(y)
            return y

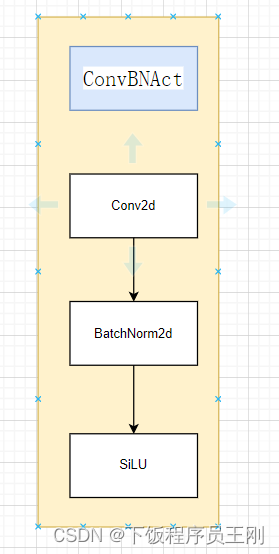

The above is the CSPStage code. To follow it, we first need to understand two classes: ConvBNAct and BasicBlock_3x3_Reverse.
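The channel bookkeeping in CSPStage can be checked with plain arithmetic before reading those two classes. The sketch below is not code from the repo: `csp_stage_channels` and `trace_forward` are hypothetical helper names, and it assumes `spp=False`. It only mirrors the integer arithmetic in `__init__` and the list that `forward` builds.

```python
def csp_stage_channels(ch_out, n, split_ratio=2):
    """Return (ch_first, ch_mid, concat_width), mirroring CSPStage.__init__."""
    ch_first = ch_out // split_ratio      # output width of conv1
    ch_mid = ch_out - ch_first            # output width of conv2 and of each block
    concat_width = ch_mid * n + ch_first  # input width expected by conv3
    return ch_first, ch_mid, concat_width


def trace_forward(ch_out, n):
    """Channel width of each tensor appended to mid_out in forward()."""
    ch_first, ch_mid, _ = csp_stage_channels(ch_out, n)
    mid_out = [ch_first]        # y1
    for _ in range(n):
        mid_out.append(ch_mid)  # y2 after each stacked block
    return mid_out


print(csp_stage_channels(128, 3))  # (64, 64, 256)
print(trace_forward(128, 3))       # [64, 64, 64, 64]
print(csp_stage_channels(129, 2))  # (64, 65, 194)
```

The widths in `mid_out` sum to the input width of `conv3`, which is why the concatenation in `forward` lines up. With an odd `ch_out` the two branches simply get unequal widths.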

    import torch.nn as nn

    # get_norm and get_activation are helper functions from the DAMO-YOLO repo.


    class ConvBNAct(nn.Module):
        """A Conv2d -> Batchnorm -> silu/leaky relu block"""
        def __init__(
            self,
            in_channels,
            out_channels,
            ksize,
            stride=1,
            groups=1,
            bias=False,
            act='silu',
            norm='bn',
            reparam=False,
        ):
            super().__init__()
            # same padding
            pad = (ksize - 1) // 2
            self.conv = nn.Conv2d(
                in_channels,
                out_channels,
                kernel_size=ksize,
                stride=stride,
                padding=pad,
                groups=groups,
                bias=bias,
            )
            if norm is not None:
                self.bn = get_norm(norm, out_channels, inplace=True)
            if act is not None:
                self.act = get_activation(act, inplace=True)
            self.with_norm = norm is not None
            self.with_act = act is not None

        def forward(self, x):
            x = self.conv(x)
            if self.with_norm:
                x = self.bn(x)
            if self.with_act:
                x = self.act(x)
            return x

        def fuseforward(self, x):
            # Skips BN (presumably for use once BN has been fused into the conv).
            return self.act(self.conv(x))

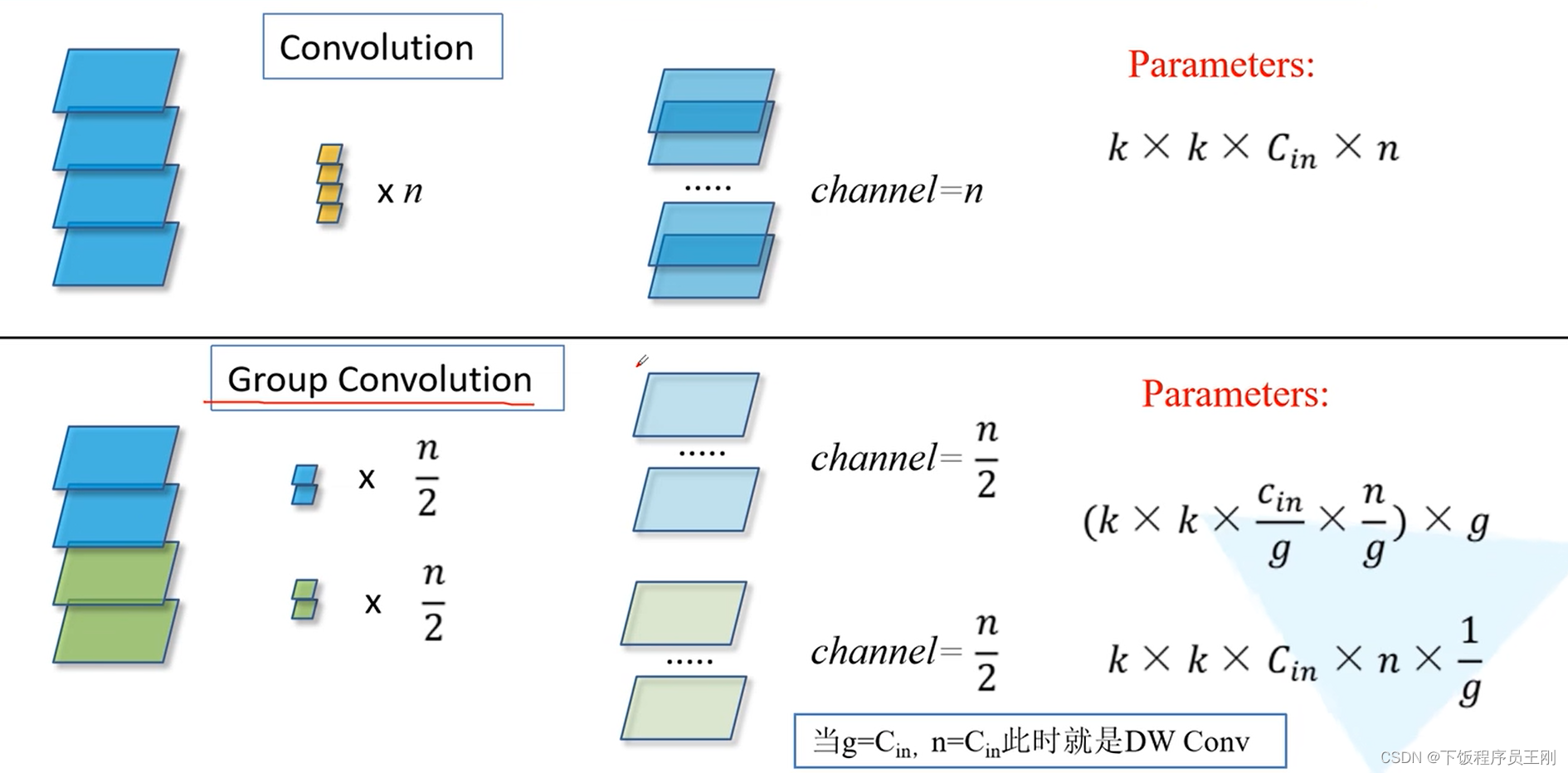

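ConvBNAct is easy to read: Conv + BN + SiLU and that's it (other activation functions can be used as well; the paper uses SiLU).

If the groups argument is set to a value greater than 1, the convolution becomes a grouped convolution.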
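To make the effect of `groups` and of the `pad = (ksize - 1) // 2` rule concrete, here is a small arithmetic sketch. The helper names `conv_weight_shape` and `conv_output_size` are made up for illustration. The weight shape follows the `(out_channels, in_channels // groups, k, k)` layout that `torch.nn.Conv2d` uses, and the output-size formula is the standard one for a convolution with dilation 1.

```python
import math


def conv_weight_shape(in_channels, out_channels, ksize, groups=1):
    """Weight shape of a 2D convolution with the given number of groups."""
    assert in_channels % groups == 0 and out_channels % groups == 0
    return (out_channels, in_channels // groups, ksize, ksize)


def conv_output_size(size, ksize, stride=1):
    """Spatial output size when padding follows ConvBNAct's rule."""
    pad = (ksize - 1) // 2
    return (size + 2 * pad - ksize) // stride + 1


dense = conv_weight_shape(64, 64, 3, groups=1)
grouped = conv_weight_shape(64, 64, 3, groups=4)
print(dense, math.prod(dense))      # (64, 64, 3, 3) 36864
print(grouped, math.prod(grouped))  # (64, 16, 3, 3) 9216
print(conv_output_size(40, 3), conv_output_size(40, 1))  # 40 40
print(conv_output_size(40, 3, stride=2))                 # 20
```

With 4 groups each output channel only sees a quarter of the input channels, so the weight count drops by a factor of 4. With stride 1 and an odd kernel size, the padding rule keeps the feature map size unchanged.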

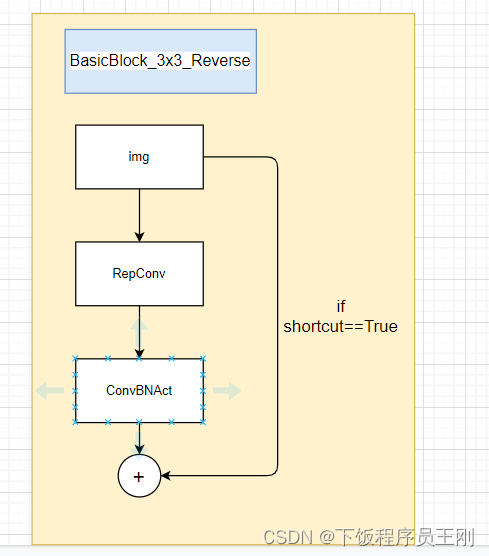

    import torch.nn as nn


    class BasicBlock_3x3_Reverse(nn.Module):
        def __init__(self,
                     ch_in,
                     ch_hidden_ratio,
                     ch_out,
                     act='relu',
                     shortcut=True):
            super(BasicBlock_3x3_Reverse, self).__init__()
            # The residual addition requires matching input and output widths.
            assert ch_in == ch_out
            ch_hidden = int(ch_in * ch_hidden_ratio)
            self.conv1 = ConvBNAct(ch_hidden, ch_out, 3, stride=1, act=act)
            self.conv2 = RepConv(ch_in, ch_hidden, 3, stride=1, act=act)
            self.shortcut = shortcut

        def forward(self, x):
            # "Reverse" order: RepConv (conv2) runs first, then ConvBNAct (conv1).
            y = self.conv2(x)
            y = self.conv1(y)
            if self.shortcut:
                return x + y
            else:
                return y

To understand BasicBlock_3x3_Reverse you need to know the RepConv class, which is adapted from the RepVGGBlock of the RepVGG network.

    import numpy as np
    import torch
    import torch.nn as nn

    # get_activation and conv_bn are helpers from the DAMO-YOLO repo; conv_bn
    # returns an nn.Sequential with `conv` and `bn` submodules.


    class RepConv(nn.Module):
        '''RepConv is a basic rep-style block, including training and deploy status
        Code is based on https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py
        '''
        def __init__(self,
                     in_channels,
                     out_channels,
                     kernel_size=3,
                     stride=1,
                     padding=1,
                     dilation=1,
                     groups=1,
                     padding_mode='zeros',
                     deploy=False,
                     act='relu',
                     norm=None):
            super(RepConv, self).__init__()
            self.deploy = deploy
            self.groups = groups
            self.in_channels = in_channels
            self.out_channels = out_channels

            assert kernel_size == 3
            assert padding == 1

            # Padding for the 1x1 branch (0 when kernel_size == 3, padding == 1).
            padding_11 = padding - kernel_size // 2

            if isinstance(act, str):
                self.nonlinearity = get_activation(act)
            else:
                self.nonlinearity = act

            if deploy:
                # Inference form: a single fused 3x3 conv with bias.
                self.rbr_reparam = nn.Conv2d(in_channels=in_channels,
                                             out_channels=out_channels,
                                             kernel_size=kernel_size,
                                             stride=stride,
                                             padding=padding,
                                             dilation=dilation,
                                             groups=groups,
                                             bias=True,
                                             padding_mode=padding_mode)
            else:
                # Training form: no identity branch, only 3x3 and 1x1 branches.
                self.rbr_identity = None
                self.rbr_dense = conv_bn(in_channels=in_channels,
                                         out_channels=out_channels,
                                         kernel_size=kernel_size,
                                         stride=stride,
                                         padding=padding,
                                         groups=groups)
                self.rbr_1x1 = conv_bn(in_channels=in_channels,
                                       out_channels=out_channels,
                                       kernel_size=1,
                                       stride=stride,
                                       padding=padding_11,
                                       groups=groups)

        def forward(self, inputs):
            '''Forward process'''
            if hasattr(self, 'rbr_reparam'):
                return self.nonlinearity(self.rbr_reparam(inputs))

            if self.rbr_identity is None:
                id_out = 0
            else:
                id_out = self.rbr_identity(inputs)

            return self.nonlinearity(
                self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out)

        def get_equivalent_kernel_bias(self):
            # Fold BN into each branch, then sum kernels and biases.
            kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
            kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
            kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
            return kernel3x3 + self._pad_1x1_to_3x3_tensor(
                kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

        def _pad_1x1_to_3x3_tensor(self, kernel1x1):
            if kernel1x1 is None:
                return 0
            else:
                # Zero-pad so the 1x1 weight sits at the centre of a 3x3 kernel.
                return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

        def _fuse_bn_tensor(self, branch):
            if branch is None:
                return 0, 0
            if isinstance(branch, nn.Sequential):
                kernel = branch.conv.weight
                running_mean = branch.bn.running_mean
                running_var = branch.bn.running_var
                gamma = branch.bn.weight
                beta = branch.bn.bias
                eps = branch.bn.eps
            else:
                # Identity branch: express it as a 3x3 kernel with a centre 1.
                assert isinstance(branch, nn.BatchNorm2d)
                if not hasattr(self, 'id_tensor'):
                    input_dim = self.in_channels // self.groups
                    kernel_value = np.zeros((self.in_channels, input_dim, 3, 3),
                                            dtype=np.float32)
                    for i in range(self.in_channels):
                        kernel_value[i, i % input_dim, 1, 1] = 1
                    self.id_tensor = torch.from_numpy(kernel_value).to(
                        branch.weight.device)
                kernel = self.id_tensor
                running_mean = branch.running_mean
                running_var = branch.running_var
                gamma = branch.weight
                beta = branch.bias
                eps = branch.eps
            std = (running_var + eps).sqrt()
            t = (gamma / std).reshape(-1, 1, 1, 1)
            return kernel * t, beta - running_mean * gamma / std

        def switch_to_deploy(self):
            if hasattr(self, 'rbr_reparam'):
                return
            kernel, bias = self.get_equivalent_kernel_bias()
            self.rbr_reparam = nn.Conv2d(
                in_channels=self.rbr_dense.conv.in_channels,
                out_channels=self.rbr_dense.conv.out_channels,
                kernel_size=self.rbr_dense.conv.kernel_size,
                stride=self.rbr_dense.conv.stride,
                padding=self.rbr_dense.conv.padding,
                dilation=self.rbr_dense.conv.dilation,
                groups=self.rbr_dense.conv.groups,
                bias=True)
            self.rbr_reparam.weight.data = kernel
            self.rbr_reparam.bias.data = bias
            for para in self.parameters():
                para.detach_()
            # Drop the training-time branches; only rbr_reparam remains.
            self.__delattr__('rbr_dense')
            self.__delattr__('rbr_1x1')
            if hasattr(self, 'rbr_identity'):
                self.__delattr__('rbr_identity')
            if hasattr(self, 'id_tensor'):
                self.__delattr__('id_tensor')
            self.deploy = True

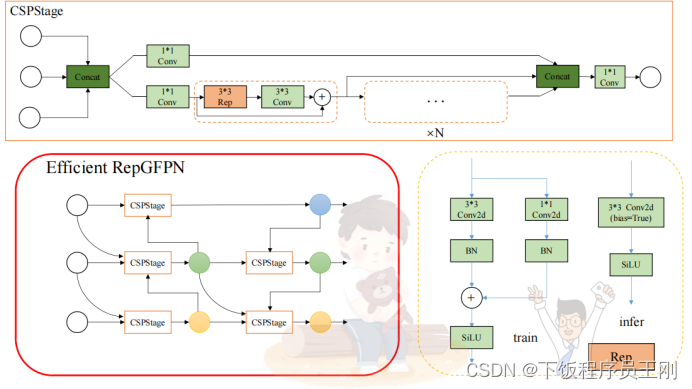

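The key feature of RepConv is structural re-parameterization: training uses several parallel branches (three in the original RepVGGBlock: a 3x3 conv, a 1x1 conv, and an identity branch), and at inference time the branches are fused into a single 3x3 convolution, which cuts inference time considerably (I recommend watching an explainer video on RepVGG). Sorry that my drawing is so ugly.

The RepConv here uses the two-branch structure (a): rbr_identity is set to None, so only the 3x3 and 1x1 branches remain.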
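The two-branch fusion can be verified numerically. The sketch below is a simplified illustration and not the repo's code. It assumes a single input and output channel, stride 1, and inference-mode BN with fixed statistics, and it uses plain Python lists so the arithmetic stays visible. All function names and the BN values are made up for the example. It folds each BN into its conv in the same way as `_fuse_bn_tensor`, pads the 1x1 kernel to 3x3, and compares the merged conv against the sum of the two branches. The activation is left out because it is applied after the sum in both forms.

```python
import math
import random


def conv2d_same(x, kernel, bias=0.0):
    """Single-channel 2D cross-correlation with zero padding, stride 1."""
    k = len(kernel)
    pad = k // 2
    h, w = len(x), len(x[0])
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in range(k):
                for dj in range(k):
                    r, c = i + di - pad, j + dj - pad
                    if 0 <= r < h and 0 <= c < w:
                        out[i][j] += kernel[di][dj] * x[r][c]
    return out


def batchnorm_eval(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BatchNorm for one channel (fixed running statistics)."""
    std = math.sqrt(var + eps)
    return [[gamma * (v - mean) / std + beta for v in row] for row in y]


def fuse_conv_bn(kernel, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into a bias-free conv; returns (scaled kernel, bias)."""
    scale = gamma / math.sqrt(var + eps)
    return [[wt * scale for wt in row] for row in kernel], beta - mean * scale


def pad_1x1_to_3x3(kernel1x1):
    """Put the 1x1 weight at the centre of an otherwise zero 3x3 kernel."""
    return [[0.0, 0.0, 0.0], [0.0, kernel1x1[0][0], 0.0], [0.0, 0.0, 0.0]]


random.seed(0)
x = [[random.uniform(-1, 1) for _ in range(5)] for _ in range(5)]
k3 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
k1 = [[random.uniform(-1, 1)]]
bn3 = dict(gamma=1.3, beta=0.2, mean=0.1, var=0.8)    # arbitrary example values
bn1 = dict(gamma=0.7, beta=-0.4, mean=-0.3, var=1.5)  # arbitrary example values

# Training-time form: two conv+BN branches, summed.
branch3 = batchnorm_eval(conv2d_same(x, k3), **bn3)
branch1 = batchnorm_eval(conv2d_same(x, k1), **bn1)
two_branch = [[a + b for a, b in zip(r3, r1)]
              for r3, r1 in zip(branch3, branch1)]

# Deploy-time form: one 3x3 conv with the merged kernel and bias.
fk3, fb3 = fuse_conv_bn(k3, **bn3)
fk1, fb1 = fuse_conv_bn(k1, **bn1)
merged_kernel = [[a + b for a, b in zip(r3, r1)]
                 for r3, r1 in zip(fk3, pad_1x1_to_3x3(fk1))]
fused = conv2d_same(x, merged_kernel, bias=fb3 + fb1)

max_diff = max(abs(a - b)
               for ra, rb in zip(two_branch, fused)
               for a, b in zip(ra, rb))
print(max_diff < 1e-9)  # True
```

The two forms agree up to floating-point rounding, which is why `switch_to_deploy` can delete `rbr_dense` and `rbr_1x1` once `rbr_reparam` holds the merged weights.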

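I'll write up the remaining details when I get the chance. The code is not hard, and if you read it slowly you can follow all of it. Apologies for anything I got wrong.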